We often talk about data quality, especially when we’re about to start a new integration project to connect a website or app with a back-office CRM or other system. That usually means synchronising data in one or both directions between multiple data repositories. Data quality plays a major role in many areas of our work, including marketing campaign planning, informed decision making, and optimising operations and business processes in general, and it is becoming an increasingly popular topic of conversation.

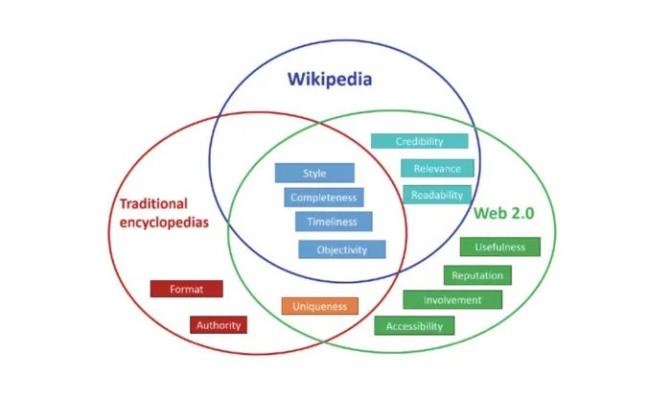

Wikipedia suggests that data is generally considered high quality if it is fit for [its] intended uses in operations, decision making and planning. It uses this diagram to show the most common quality dimensions applied by traditional encyclopaedias (format, authority), Wikipedia (style, completeness, timeliness, objectivity, credibility, relevance and readability) and Web 2.0 (usefulness, reputation, involvement and accessibility), which gives a good overview of the approaches of yesterday and today.

The important part of the Wikipedia description for us is ‘intended uses in operations’. Quite often we find that data is structured to support old processes, or that it was once fit for purpose but hasn’t kept pace with change or new processes.

[Image: Data quality Venn diagram]

Granite 5’s view is that good quality data is sensibly structured, easy to understand and consistent. We often say that if data or logic can’t be communicated easily in an Excel document without annotations and notes, then the data quality isn’t good. But while data is of course rows and columns of raw values, data quality is often measured by how the data is used, so it is as much about processes. For this reason we often review our clients’ business processes, to make sure that their data enables them to work how they’d like to, not necessarily how they’ve always worked before.

We would like to share some of our experiences on data quality, and how this can impact your organisation:

Products

One area where we often find significant room for improvement is product data, especially when importing or synchronising data from stock, fulfilment or order management systems. Hurdles we often have to overcome include:

Product variations stored as separate products and SKUs (e.g. SKU001-blk) instead of a single product with variations

A single ‘size’ field used for different types of measurement: S M L XL, 120mm, 14Kg, 12v, size 10

Incorrect use of fields, for example using ‘title’ for post-nominals, resulting in ‘Dear OBE Smith’

Manually specifying prices in multiple currencies, making it difficult to quickly adjust exchange rates

Products being managed in Salesforce or another CRM that doesn’t support multiple images, variations, or semi-complex categorisation.
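As a rough illustration of the first two hurdles, the sketch below groups variant SKUs under a parent product and splits a mixed ‘size’ field into measurements and labels. It assumes a hypothetical flat export where the variant is a suffix after a hyphen (as in SKU001-blk); the field names, sample rows and helper names are ours for illustration, and real exports will need their own rules.

```python
import re
from collections import defaultdict

# Hypothetical flat export: one row per SKU, variant encoded as a '-suffix'.
rows = [
    {"sku": "SKU001-blk", "size": "M"},
    {"sku": "SKU001-red", "size": "XL"},
    {"sku": "SKU002", "size": "120mm"},
    {"sku": "SKU003", "size": "14Kg"},
]

def group_variants(rows):
    """Group rows under a parent SKU, treating any '-suffix' as a variant code."""
    products = defaultdict(list)
    for row in rows:
        parent, _, variant = row["sku"].partition("-")
        products[parent].append({"variant": variant or None, "size": row["size"]})
    return dict(products)

# A number followed by a unit, e.g. '120mm', '14Kg', '12v'.
SIZE_PATTERN = re.compile(r"^(\d+(?:\.\d+)?)\s*([a-zA-Z]+)$")

def parse_size(raw):
    """Classify a free-text size as a measurement (value + unit) or a label."""
    text = raw.strip()
    match = SIZE_PATTERN.match(text)
    if match:
        return {"kind": "measurement", "value": float(match.group(1)), "unit": match.group(2).lower()}
    # Anything else (S, M, L, 'size 10') is kept as a label for manual review.
    return {"kind": "label", "value": text}

grouped = group_variants(rows)
```

Here `grouped["SKU001"]` holds two variants of one product, and `parse_size` makes it visible which ‘size’ values are really lengths, weights or voltages and which are clothing-style labels.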

Contacts

Undoubtedly the most common data type we work with is personal contact information, specifically data from customer relationship management (CRM) platforms like HubSpot, Salesforce, SugarCRM, Act!, MS Dynamics and Zoho, or even Excel spreadsheets and CSV files.

If the data is being used for marketing or providing customer services, then consistency, completeness and segmentation are key to having good quality data. Only when contacts can be segmented can you truly send targeted communications; one message isn’t suitable for all!

One of our most surprising discoveries was an email marketing database that didn’t have an email address for every contact. It may have been the result of a mass compliance data purge, but it is worrying that potentially hot leads may not have been contacted through the appropriate channels. Receiving inappropriate or mis-targeted mailings is a result of poor data quality, or sometimes laziness.

This data set is often subject to lengthy data cleansing and de-duping processes in an effort to achieve really good quality and therefore useful data.
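A minimal sketch of one de-duping step is shown below, assuming contacts arrive as dictionaries with a hypothetical `email` field. It treats records whose email matches after trimming and lower-casing as duplicates and sets aside records with no email at all; real cleansing usually also needs fuzzy matching on names and addresses, which is not shown.

```python
def normalise_email(value):
    """Trim and lower-case an email; return None for blank or missing values."""
    email = (value or "").strip().lower()
    return email or None

def dedupe_contacts(contacts):
    """Keep the first record per normalised email; collect records with no email."""
    unique, missing_email = {}, []
    for contact in contacts:
        email = normalise_email(contact.get("email"))
        if email is None:
            missing_email.append(contact)
        elif email not in unique:
            unique[email] = {**contact, "email": email}
    return list(unique.values()), missing_email

# Illustrative sample data only.
contacts = [
    {"name": "A. Smith", "email": "a.smith@example.com"},
    {"name": "Alice Smith", "email": " A.Smith@Example.com "},
    {"name": "B. Jones", "email": ""},
]
unique, missing = dedupe_contacts(contacts)
```

Keeping the first record is an arbitrary choice made for brevity; in practice you would decide which duplicate to keep, or how to merge them, based on how the data is used.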

Structure

One question we love to ask is ‘why do you capture that data?’. Answers typically include ‘because I was told to’, ‘we’ve always done it this way’ or ‘that’s how the system is set up’, but quite often that’s not the answer we’re looking for. There should be a clear reason for any data to be captured, and a clear process for how it’s going to be used. If you’re not sure why data is gathered, we recommend reviewing your data sets and processes to learn what data is captured and how it’s used, or better still, how it should or could be used.

A data mapping process will help to identify problems. A detailed review will highlight gaps where important data is missing, bloat from unnecessary data, confusing or contradictory data, and legacy data that has no use.
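One simple input to such a review is a fill-rate profile: for each field, the share of records that actually contain a value. The sketch below assumes records are dictionaries and uses made-up field names; a low fill rate on an important field suggests a gap, while a field that is almost never filled may be bloat or legacy data.

```python
def profile_fields(records):
    """Return, per field, the fraction of records holding a non-empty value."""
    if not records:
        return {}
    fields = {key for record in records for key in record}
    total = len(records)
    return {
        field: sum(1 for r in records if r.get(field) not in (None, "")) / total
        for field in sorted(fields)
    }

# Illustrative sample data only.
records = [
    {"email": "a@example.com", "fax": ""},
    {"email": "b@example.com", "fax": None},
    {"email": "", "fax": ""},
    {"email": "c@example.com"},
]
profile = profile_fields(records)
```

With this sample, `email` is filled in three of four records and `fax` in none, which is the kind of signal that prompts the ‘why do you capture that data?’ question.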

[Image: Data quality schema]

Automation

It’s really important that you have confidence in your data quality before thinking about automation; otherwise you risk missing valuable opportunities, damaging existing relationships or, worse, failing compliance.

You should be able to easily extract a list of attendees from your last event and segment them into customers and non-customers, for example. If you can’t, you’re not quite ready to start automating your processes for next year.
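As a sketch of that readiness check, assuming you can export attendees and a list of customer email addresses (both hypothetical structures here), the segmentation itself should be only a few lines. If it takes much more than this, the difficulty is usually in the data rather than the code.

```python
def segment_attendees(attendees, customer_emails):
    """Split attendees into customers and non-customers by matching email."""
    known = {email.strip().lower() for email in customer_emails}
    segments = {"customers": [], "non_customers": []}
    for attendee in attendees:
        email = attendee["email"].strip().lower()
        key = "customers" if email in known else "non_customers"
        segments[key].append(attendee)
    return segments

# Illustrative sample data only.
attendees = [
    {"name": "A. Smith", "email": "a.smith@example.com"},
    {"name": "C. Patel", "email": "c.patel@example.com"},
]
customer_emails = ["A.Smith@example.com"]
segments = segment_attendees(attendees, customer_emails)
```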

Whether you’re looking to automate a process after a user registers for an event, like sending reminders and follow-up surveys, or wishing to keep in touch with loyal customers, you can be more agile, targeted and efficient if your data quality is fit for purpose.

Compliance

We couldn’t finish an article about data without mentioning GDPR. The reality, however, is that much of it was already in place under older DMA, ICO and data protection guidelines; GDPR has simply reminded us of their importance.

Some of the common compliance issues we uncover with data include:

Being unable to trace the original source of your data

Not knowing which version of your policies a data subject has accepted

Not knowing where data is stored, especially when using cloud services, e.g. Office 365, SharePoint, Dropbox

Not including offsite backups in risk assessments

Data security not being taken seriously

Not knowing exactly who has access to your data and how it’s used from department to department

Treating all data equally

Fragmented processes and policies

Outsourcing responsibility

Keeping legacy data.
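The first two issues in this list, traceability of source and policy version, come down to what is stored alongside each contact. The sketch below shows one possible record shape; the class, field names and sample values are hypothetical, and what you actually need to record is a matter for your own compliance advice.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass(frozen=True)
class ConsentRecord:
    """Hypothetical record of where a contact came from and what they accepted."""
    contact_email: str
    source: str           # where the data was captured, e.g. a named form
    policy_version: str   # version of the policy the person accepted
    accepted_at: datetime

def accepted_current_policy(record, current_policy_version):
    """True if the contact accepted the policy version currently in force."""
    return record.policy_version == current_policy_version

# Illustrative sample data only.
record = ConsentRecord(
    contact_email="a.smith@example.com",
    source="event-signup-form",
    policy_version="2018-05",
    accepted_at=datetime(2018, 5, 25, tzinfo=timezone.utc),
)
```

With a structure like this, questions such as ‘where did this contact come from?’ and ‘which policy did they agree to?’ can be answered from the data itself rather than from memory.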

Hopefully you’ve found this data quality article useful. It can be a really complex and time-intensive area, but we love working with our clients to find the easiest and most feasible, sometimes automated, routes to improving data quality, which often also has a positive impact on your organisation’s processes, security and efficiency.